2017-10-17

Apple says it has done extensive testing to ensure that Face ID treats everyone equally when it launches next month with the iPhone X.

Face ID has attracted a slew of security questions from members of the public wondering how Apple plans to keep biometric data private. U.S. Senator Al Franken also asked what Apple is doing to protect against racial, gender, or age bias in Face ID.

Apple finally responded to the senator’s question, providing a deeper look into the testing process.

To make Face ID incredibly accurate, Apple worked with diverse people around the world during testing. Cynthia Hogan, vice president of public policy for the Americas, released the following statement today:

“The accessibility of the product to people of diverse races and ethnicities was very important to us. Face ID uses facial matching neural networks that we developed using over a billion images, including IR and depth images collected in studies conducted with the participants’ informed consent. We worked with participants from around the world to include a representative group of people accounting for gender, age, ethnicity, and other factors. We augmented the studies as needed to provide a high degree of accuracy for a diverse range of users. In addition, a neural network that is trained to spot and resist spoofing defends against attempts to unlock your phone with photos or masks.”

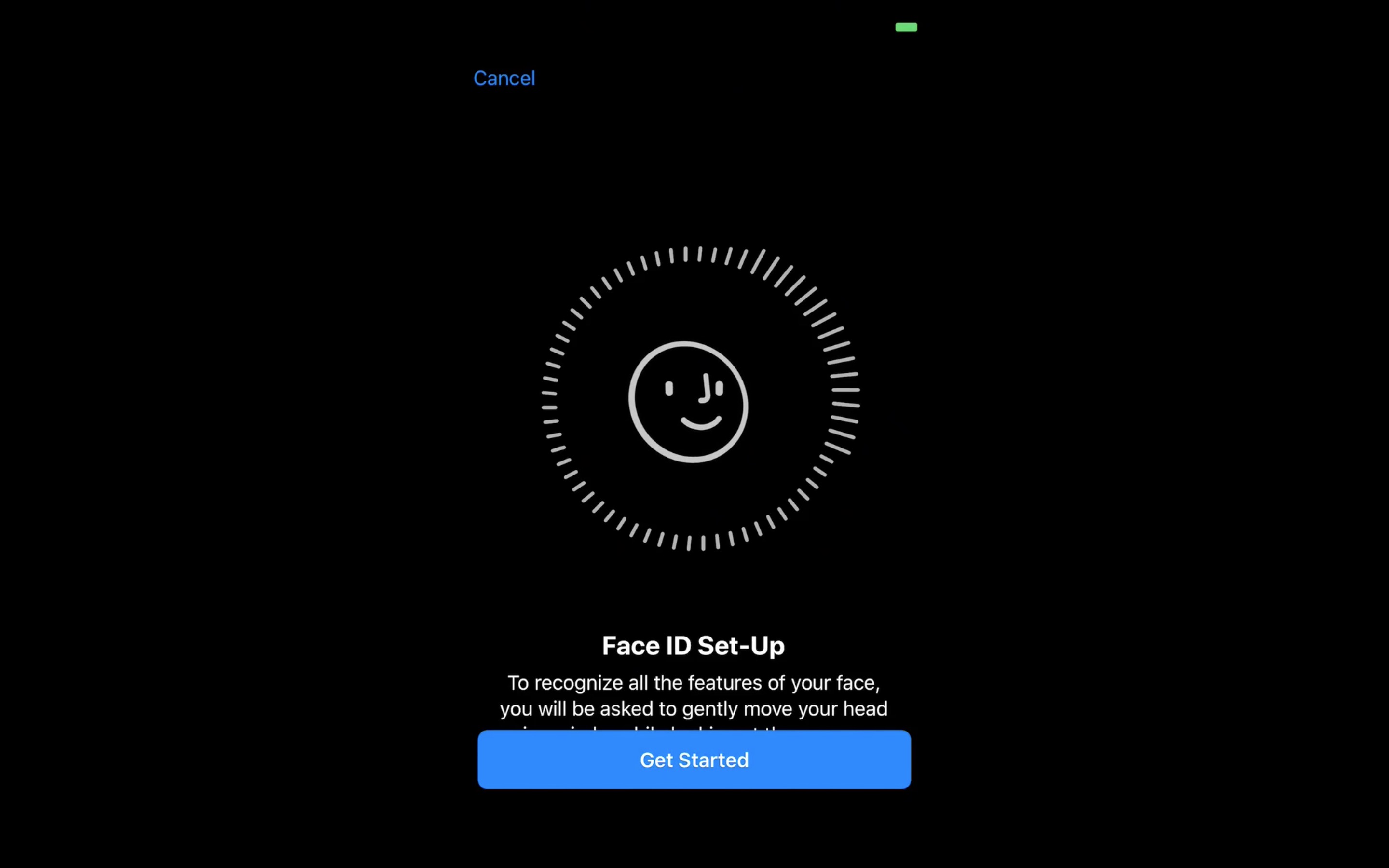

Apple’s Face ID feature will get its first big test on November 3, when millions of customers finally get it into their hands. The security feature for unlocking the iPhone X is powered by the TrueDepth camera system, which can detect a person’s face even in the dark.

Face ID replaces the traditional home button and Touch ID. A recent rumor claimed the tech could make its way to all iPhones next year.

Source: Cult of Mac