2017-11-23

Apple’s ambitions to build a self-driving car have reportedly shifted gears over the years, but we know the company is focusing on the software side of the equation. This June, CEO Tim Cook said the iPhone maker is building autonomous systems that could power a range of different vehicles (rather than, say, working on its own Apple-branded SUVs). “We sort of see it as the mother of all AI projects,” said Cook.

Now, new research from the company’s machine learning team points in this direction. A paper published on the preprint server arXiv describes a mapping system that could be put to a range of uses, including powering “autonomous navigation, housekeeping robots, and augmented / virtual reality.” To be clear, though, this is academic research: it doesn’t mean Apple is working on these particular use cases.

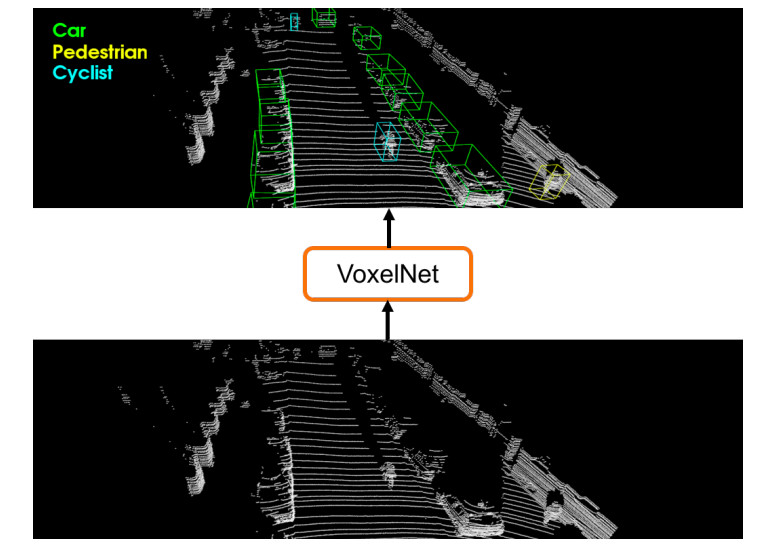

The system in question is called VoxelNet, and it’s designed to make better use of the data coming from the “eyes” of many self-driving systems: LIDAR sensors. These components are integral to many autonomous vehicles. They work by bouncing laser pulses off nearby objects and timing the reflections to build a 3D model of their surroundings. They offer better depth information than regular cameras, but produce patchy maps, with large sections often rendered invisible by objects blocking the laser’s path. This leads to maps that are “sparse and have highly variable point density,” as Apple’s researchers put it. In other words, raw LIDAR data on its own isn’t good enough for safe self-driving.
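To show how a single laser return becomes a point in that 3D model, here is a minimal Python sketch of the general time-of-flight principle. It is not taken from Apple’s paper: the function names, the angle conventions (azimuth measured from the x-axis, elevation measured up from the horizontal plane) and the one-point-per-beam model are assumptions made for illustration.

```python
import math
from typing import Iterable, List, Optional, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

Point = Tuple[float, float, float]


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the reflecting surface in metres.

    The pulse travels out and back, so the one-way distance is half the round trip.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(distance_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one return (range plus beam angles) into (x, y, z) in the sensor frame.

    Assumed convention: azimuth is measured in the horizontal plane from the x-axis,
    elevation is measured up from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), distance_m * math.sin(el))


def scan_to_points(returns: Iterable[Tuple[Optional[float], float, float]]) -> List[Point]:
    """Turn a sweep of (round_trip_s, azimuth_deg, elevation_deg) returns into a point cloud.

    Each beam yields at most one point: the first surface it hits. Anything behind that
    surface is never measured, and a beam with no echo (round_trip_s is None) yields
    nothing, which is why real scans come out sparse and patchy.
    """
    points: List[Point] = []
    for round_trip_s, azimuth_deg, elevation_deg in returns:
        if round_trip_s is None:
            continue  # no reflection came back, so there is no point to record
        distance = range_from_time_of_flight(round_trip_s)
        points.append(to_cartesian(distance, azimuth_deg, elevation_deg))
    return points


# Usage: two beams, the second of which gets no echo back.
cloud = scan_to_points([(200e-9, 0.0, 0.0), (None, 10.0, 0.0)])
print(len(cloud), cloud)
```

A pulse that returns after 200 nanoseconds corresponds to a surface roughly 30 metres away; a real sensor repeats this for many beams per sweep.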

To overcome this problem, engineers often deploy a number of separate systems that first divide the 3D LIDAR data into regions of interest (for example, by splitting it into 3D pixels known as “voxels”) and then classify what each region contains (identifying bikes, pedestrians, street signs, and so on). Apple’s VoxelNet combines these stages into a single neural network that is trained end to end, making it more efficient than its predecessors. Researchers Yin Zhou and Oncel Tuzel benchmarked VoxelNet against a number of rival methods, and it handily outperformed them.
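The first step, grouping LIDAR points into voxels, can be sketched in a few lines of Python. This is a simplified illustration, not Apple’s code: the `voxelize` function, its parameters and the keep-the-first-points cap are hypothetical, and the per-voxel feature learning and object classification that VoxelNet’s neural network performs are not shown.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float,
             max_points_per_voxel: int) -> Dict[VoxelIndex, List[Point]]:
    """Group (x, y, z) points into cubic voxels with side length `voxel_size`.

    Only occupied voxels are stored, so memory grows with the number of points rather
    than with the size of the grid. A voxel holding more than `max_points_per_voxel`
    points keeps just the first ones seen (a simplification for this sketch).
    """
    voxels: Dict[VoxelIndex, List[Point]] = defaultdict(list)
    for point in points:
        x, y, z = point
        # floor() maps each coordinate to the integer index of the cell containing it,
        # including negative coordinates (e.g. -0.5 falls in cell -1).
        index = (math.floor(x / voxel_size),
                 math.floor(y / voxel_size),
                 math.floor(z / voxel_size))
        if len(voxels[index]) < max_points_per_voxel:
            voxels[index].append(point)
    return dict(voxels)


# Usage: three points land in one voxel (capped at two), one in a neighbouring voxel.
cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (0.05, 0.05, 0.05), (1.5, 0.0, 0.0)]
grid = voxelize(cloud, voxel_size=1.0, max_points_per_voxel=2)
points_per_voxel = {index: len(pts) for index, pts in grid.items()}
print(points_per_voxel)
```

Most of the grid around a real vehicle is empty, so storing only occupied voxels keeps the structure small, and the per-voxel counts make the uneven point density the researchers describe easy to see.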

Is it groundbreaking research for self-driving cars? Well, no, not really. Roland Meertens, a Dutch engineer who builds computer vision systems for autonomous vehicles, said the results were impressive, but pointed out that other firms have long been using different methods to overcome LIDAR’s shortcomings — including combining its 3D data with feeds from regular cameras.

“Tesla, for example, does not use LIDAR at all, but their vehicles are pretty good at lane-keeping,” Meertens told The Verge. He added that although VoxelNet could potentially be used for self-driving cars (including Apple’s), it will be “mainly interesting for other researchers working with similar data solving different problems in other spaces.”

That makes sense, considering Apple has only published it on an academic server. More interesting, perhaps, is the fact that it’s public at all. Apple’s notoriously secretive corporate culture means it’s not as forthcoming about its AI work as rival tech firms like Google and Facebook. This has been a handicap in AI, a field where publishing top-quality research helps attract top-quality talent. Apple has made some moves to open up a little, including starting a blog this July that spotlights key areas of its work.

So far, the blog has looked at a number of AI tools underpinning important Apple products, including face identification (for Face ID) and speech recognition (for Siri). But the company has yet to publish any research on autonomous systems on its blog — including this paper. It seems Apple is happy to conduct research on how to create detailed maps, but isn’t keen to plot out a public course for its own self-driving ambitions just yet.

Source: The Verge