2477

2017-11-22

Tim Cook has publicly commented on Apple’s work in autonomous systems before, and a new research paper from two Apple research scientists goes deeper into the company’s efforts. The paper describes how Apple is combining LiDAR data with deep learning for 3D object detection, an approach the authors argue represents the future of the field.

The paper is authored by Yin Zhou, an AI researcher at Apple, and Oncel Tuzel, a machine learning research scientist at the company. Both have joined Apple within the last two years. Below are some broad highlights; the full paper is available here.

The paper explains why accurate detection of objects in 3D point clouds matters for applications such as autonomous navigation, housekeeping robots, and augmented/virtual reality:

Accurate detection of objects in 3D point clouds is a central problem in many applications, such as autonomous navigation, housekeeping robots, and augmented/virtual reality. To interface a highly sparse LiDAR point cloud with a region proposal network (RPN), most existing efforts have focused on hand-crafted feature representations, for example, a bird’s eye view projection.

In this work, we remove the need of manual feature engineering for 3D point clouds and propose VoxelNet, a generic 3D detection network that unifies feature extraction and bounding box prediction into a single stage, end-to-end trainable deep network.
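The core idea behind replacing hand-crafted features is that VoxelNet works directly on the raw point cloud: it divides 3D space into equally sized voxels, groups the LiDAR points that fall into each voxel, and lets learned layers extract features per voxel. The following is a minimal sketch of only that non-learned input stage, under stated assumptions: the voxel size (0.2 x 0.2 x 0.4 m), the 35-point cap per voxel, and the function names `voxelize` and `augment_with_centroid_offsets` are illustrative choices, not Apple’s code. The learned feature-encoding layers and the region proposal network are not shown.

```python
import math
import random
from collections import defaultdict


def voxelize(points, voxel_size=(0.2, 0.2, 0.4), max_points=35, seed=0):
    """Group LiDAR points (x, y, z, reflectance) into voxels.

    Hypothetical helper: returns a dict mapping integer voxel indices
    (i, j, k) to the list of points inside that voxel. Voxels holding more
    than max_points points are randomly subsampled to max_points.
    """
    vx, vy, vz = voxel_size
    voxels = defaultdict(list)
    for p in points:
        x, y, z = p[0], p[1], p[2]
        # math.floor keeps negative coordinates in the correct voxel.
        key = (math.floor(x / vx), math.floor(y / vy), math.floor(z / vz))
        voxels[key].append(p)

    rng = random.Random(seed)  # fixed seed so sampling is reproducible
    for key, pts in voxels.items():
        if len(pts) > max_points:
            voxels[key] = rng.sample(pts, max_points)
    return dict(voxels)


def augment_with_centroid_offsets(pts):
    """Append each point's offset from its voxel's centroid.

    Turns (x, y, z, r) into the 7-value vector
    (x, y, z, r, x - cx, y - cy, z - cz) that a learned per-voxel
    encoder could consume.
    """
    n = len(pts)
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    cz = sum(p[2] for p in pts) / n
    return [(x, y, z, r, x - cx, y - cy, z - cz) for (x, y, z, r) in pts]


if __name__ == "__main__":
    cloud = [(0.05, 0.05, 0.1, 0.9), (0.15, 0.1, 0.2, 0.5), (1.05, 1.05, 0.0, 0.3)]
    voxels = voxelize(cloud)
    for key, pts in sorted(voxels.items()):
        print(key, augment_with_centroid_offsets(pts))
```

With these settings, the first two sample points share voxel `(0, 0, 0)` and the third lands in `(5, 5, 0)`. Only non-empty voxels are stored, which suits sparse LiDAR data where most of the space contains no points.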

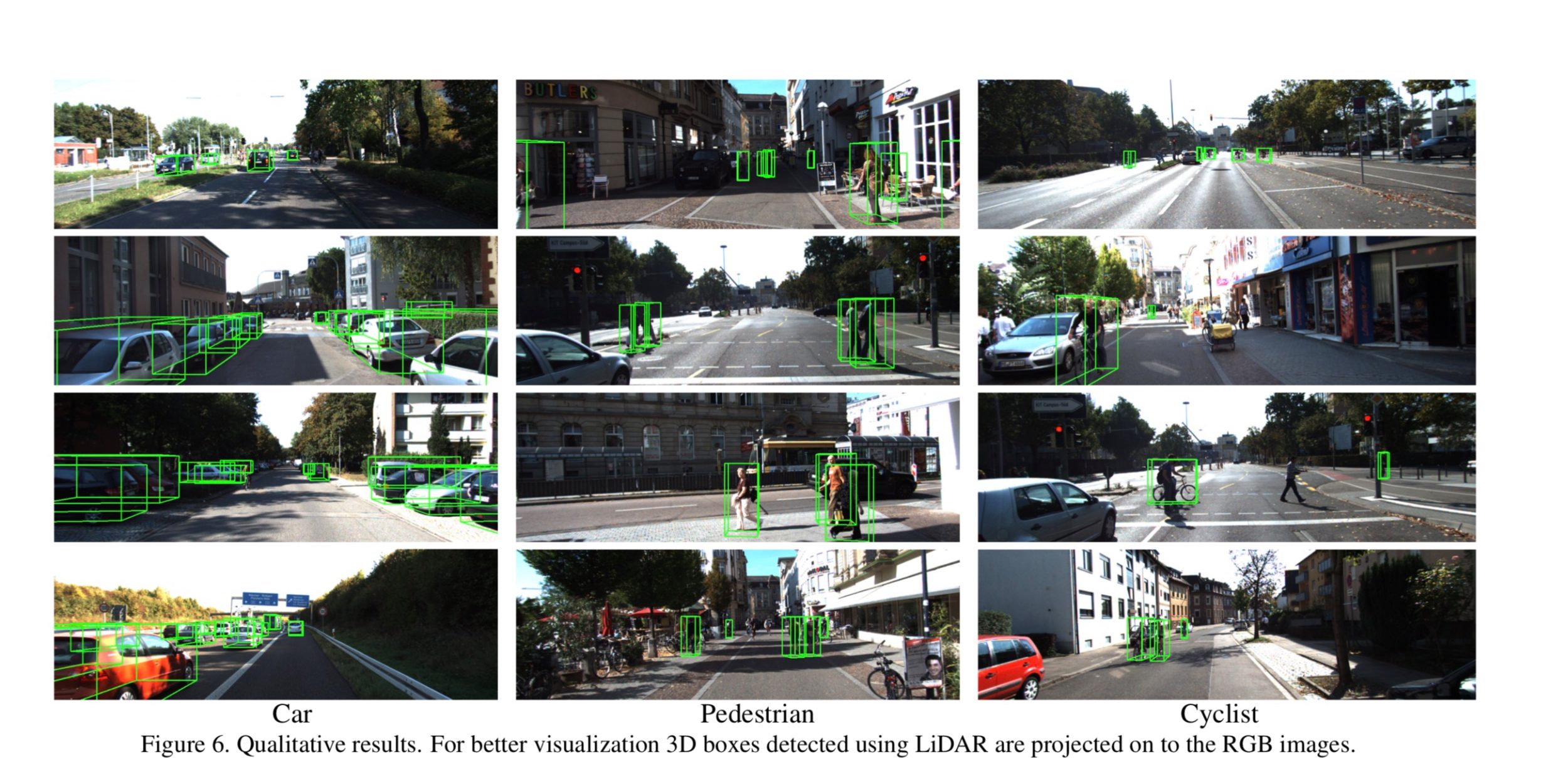

Furthermore, the paper evaluates the approach on LiDAR-based car, pedestrian, and cyclist detection benchmarks. In doing so, it presents VoxelNet as an alternative to the hand-crafted feature representations commonly used in LiDAR-based 3D detection.

Zhou and Tuzel believe their approach represents the future of 3D object detection, reporting that it outperforms other LiDAR-based methods “by a large margin” when detecting cars, cyclists, and pedestrians.

The full paper is definitely worth a read and offers a rare insight into Apple’s work on autonomous systems. Check it out here.

Source: 9to5mac