2024-06-14

Apple's Eye Tracking tech offers a new way for iPhone and iPad owners to interact with their devices. Its first appearance, in iOS 18, shows how powerful it can be for some users.

In May, Apple previewed several accessibility features coming in iOS 18 and iPadOS 18, including Eye Tracking.

Eye tracking is one of the primary ways users control Apple Vision Pro. The headset tracks what the user is looking at so that on-screen elements can be targeted, with a pinch of the fingers to confirm a selection.

The version in iOS 18 applies the same concept to a much smaller screen.

On iPhone and iPad, Eye Tracking is intended to help users with physical disabilities. If you can't easily use a touchscreen or navigate iOS or iPadOS with a finger, it offers an alternative.

Put simply, Eye Tracking determines where on the screen the user is looking.

It does so without any extra hardware. All it needs is the front-facing camera on an iPhone or iPad, combined with on-device machine learning.

Set-up and Calibration

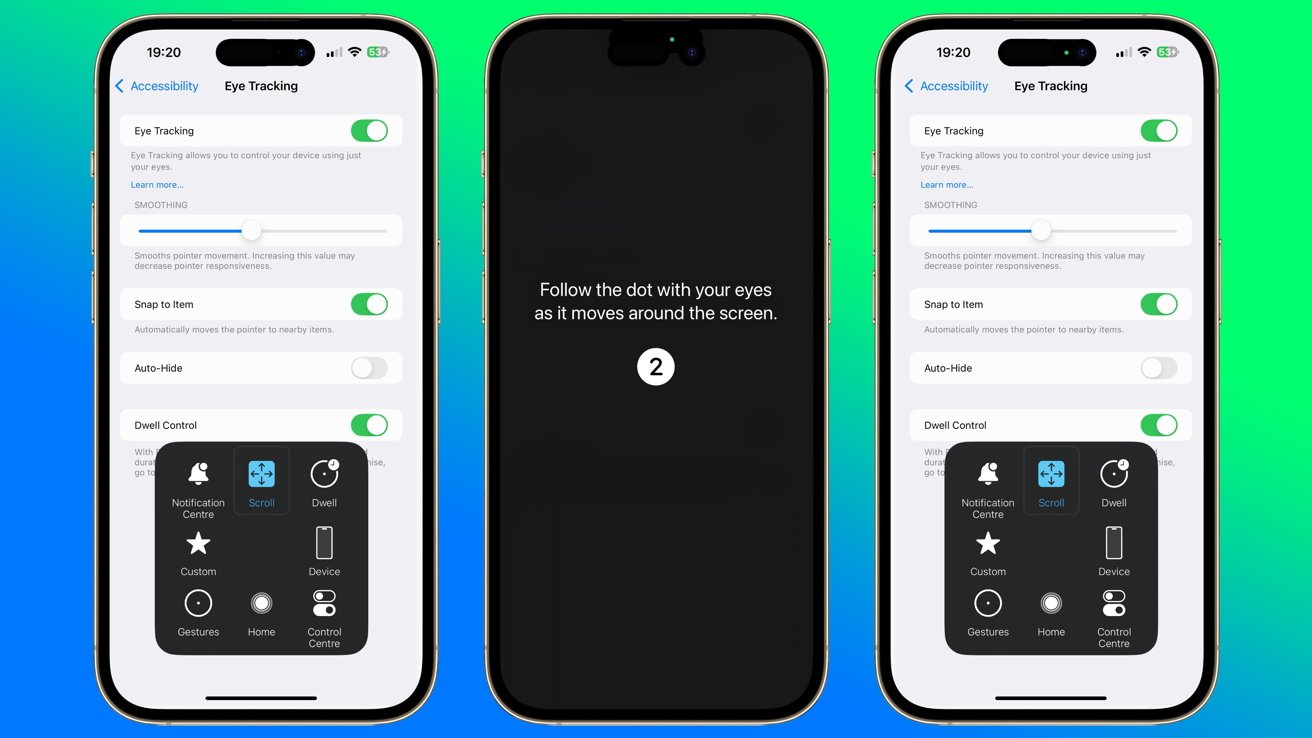

Setting up Eye Tracking takes about a minute. Open the Settings app and go to Accessibility, then select Eye Tracking under the Physical and Motor group and turn on the Eye Tracking toggle.

Once it's enabled, a pop-up notice instructs users to follow a dot with their eyes. The dot then moves around the display, pausing at specific points so that the user has to look at each position.

Once the dot has finished its circuit of the screen, the user is returned to the Settings menu.

The first change they'll notice is a black dot hovering around the screen near wherever they are looking. This acts as a pointer, taking the place of a finger.

Dwell Control and customization

When Eye Tracking is enabled, a setting called Dwell Control is turned on as well.

While users can move the pointer by looking at different parts of the screen, looking alone doesn't select anything. Dwell Control fills that gap: if the user holds their gaze on an item without shifting it for a few seconds, the item is selected.
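Apple hasn't said exactly how Dwell Control decides that a gaze has "held" on an item. As a rough illustration only, the Python sketch below shows one common way dwell selection can work: restart a timer whenever the gaze drifts outside a small radius, and fire a selection once it has stayed put long enough. The `DwellDetector` class, the 2-second threshold and the 30-point radius are hypothetical values chosen for the example, not Apple's.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellDetector:
    """Hypothetical dwell detector (not Apple's implementation): fires once
    the gaze stays within `radius` points of where the dwell started for
    at least `dwell_time` seconds."""
    dwell_time: float = 2.0   # assumed threshold, in seconds
    radius: float = 30.0      # assumed tolerance, in screen points
    _anchor: Optional[tuple] = None
    _start: float = 0.0

    def update(self, x: float, y: float, t: float) -> Optional[tuple]:
        """Feed one gaze sample (x, y) taken at time t. Returns the dwell
        point when a selection should fire, otherwise None."""
        if self._anchor is None or math.dist(self._anchor, (x, y)) > self.radius:
            # Gaze moved too far (or this is the first sample):
            # restart the dwell timer at the new position.
            self._anchor = (x, y)
            self._start = t
            return None
        if t - self._start >= self.dwell_time:
            selected = self._anchor
            self._anchor = None  # reset so one dwell triggers one selection
            return selected
        return None


detector = DwellDetector()
for x, y, t in [(100, 200, 0.0), (105, 198, 1.0), (102, 203, 2.1)]:
    hit = detector.update(x, y, t)
    if hit is not None:
        print("select item at", hit)
```

Small wobbles inside the radius don't reset the timer, which is what lets a user "hold" their gaze despite the natural jitter of eye movement.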

Dwell Control can be turned off entirely, or customized in the AssistiveTouch settings.

Smoothing can also be adjusted. This controls how responsive the pointer is to the user's eye movements, making it more or less sensitive.

In practice, this can keep the pointer from moving much when the eyes briefly flit to another point. It can also make it easier to zero in on the item you want to select.
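Apple doesn't document how Smoothing is calculated. One common way to steady a jittery pointer is an exponential moving average, sketched below in Python as an assumption rather than a description of iOS 18: each new gaze sample pulls the pointer only part of the way toward it, so a brief glance elsewhere barely moves it. The `GazeSmoother` class and its `alpha` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GazeSmoother:
    """Hypothetical exponential-moving-average smoother for a gaze pointer.
    alpha near 1.0 follows the eyes closely; alpha near 0.0 smooths heavily."""
    alpha: float = 0.3
    _pos: Optional[Tuple[float, float]] = None

    def update(self, x: float, y: float) -> Tuple[float, float]:
        """Feed one raw gaze sample and return the smoothed pointer position."""
        if self._pos is None:
            self._pos = (x, y)  # first sample: place the pointer directly
        else:
            px, py = self._pos
            # Move a fraction `alpha` of the way toward the new sample.
            self._pos = (px + self.alpha * (x - px), py + self.alpha * (y - py))
        return self._pos
```

With `alpha=0.2`, for example, a single stray sample 100 points away moves the pointer only a fifth of that distance. A lower `alpha` gives a steadier but slower pointer, which is the same responsiveness trade-off the Smoothing setting exposes.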

There's also an option for Snap to Item. As the name suggests, the pointer gravitates toward the nearest selectable interface element.

This makes selecting items from menus much quicker, since less precision is required.
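Snap to Item can be thought of as a nearest-target search. The hypothetical Python sketch below, which is not Apple's implementation, returns the selectable element closest to the gaze point, but only if it lies within an assumed snap radius. The `Target` type, the `snap_to_item` function and the 60-point radius are all illustrative.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Target:
    """A hypothetical selectable element, described by its center point."""
    name: str
    x: float
    y: float


def snap_to_item(gaze: Tuple[float, float], targets: List[Target],
                 snap_radius: float = 60.0) -> Optional[Target]:
    """Return the target whose center is nearest the gaze point, provided it
    lies within `snap_radius` points; otherwise return None (no snap)."""
    best: Optional[Target] = None
    best_dist = snap_radius
    for target in targets:
        d = math.dist(gaze, (target.x, target.y))
        if d <= best_dist:
            best, best_dist = target, d
    return best


buttons = [Target("Back", 100, 100), Target("Done", 100, 200)]
print(snap_to_item((110, 140), buttons))
```

Because the gaze only has to land within the radius rather than on the element itself, an estimate that is off by a few dozen points can still select the intended item.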

A selectable dot can also be expanded to show additional options, including Dwell Control adjustments and shortcuts to Notification Center and Control Center. This makes key parts of iOS 18 easier to reach with a control scheme that is harder to use than touch.

Tricky but useful

It's early in the beta, so the feature isn't perfectly accurate, but it gets close to where I'm looking on the display. At times the pointer overshoots where my gaze lands, but deliberately shifting my gaze to correct it isn't too hard.

The position of your head relative to the iPhone also matters, since moving your head away from where it was during calibration can throw off how the feature reads your gaze. Moving the iPhone while holding it causes the same problem.

Ideally, you should stay about 1.5 to 2 feet from the display, the same distance you were at during calibration. The iPhone should sit on a stand and not be moved around.

The concept certainly shows promise for users with mobility issues. For most others, who interact with the iPhone using their fingers, it's less useful, but still an interesting idea.

Source: AppleInsider